They Asked 100 Experts About DeepSeek. One Answer Stood Out

Author: Wilford | Date: 25-02-01 04:23 | Views: 11 | Comments: 0

On Jan. 29, Microsoft announced an investigation into whether DeepSeek may have piggybacked on OpenAI’s AI models, as reported by Bloomberg. Lucas Hansen, co-founder of the nonprofit CivAI, said that while it was hard to know whether DeepSeek circumvented US export controls, the startup’s claimed training budget referred to V3, which is roughly on par with OpenAI’s GPT-4, not R1 itself. While some big US tech companies responded to DeepSeek’s model with disguised alarm, many developers were quick to pounce on the opportunities the technology might generate.

Open source models available: a quick intro to Mistral and deepseek-coder, and how they compare. To get started quickly, you can run DeepSeek-LLM-7B-Chat with a single command on your own device. Track the NOUS run here (Nous DisTro dashboard). Please use our environment to run these models. The model will load automatically and is then ready to use. A general-use model that combines advanced analytics capabilities with a vast 13 billion parameter count, enabling it to perform in-depth data analysis and support complex decision-making processes. Our analysis indicates that Chain-of-Thought (CoT) prompting notably enhances the capabilities of DeepSeek-Coder-Instruct models. Of course they aren’t going to tell the whole story, but perhaps solving REBUS problems (with associated careful vetting of the dataset and an avoidance of too much few-shot prompting) will actually correlate with meaningful generalization in models?

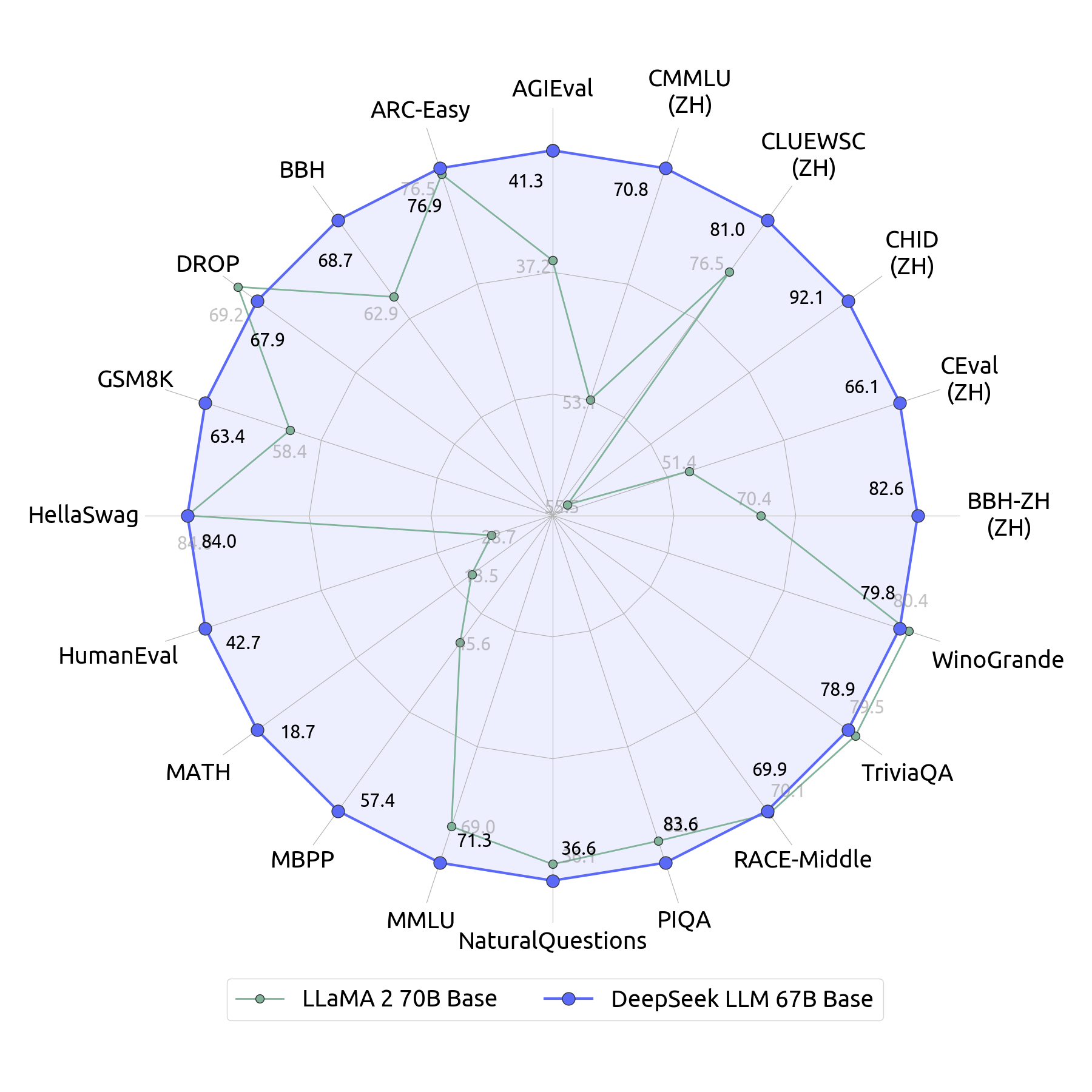

I believe open source is going to go in a similar way, where open source is going to be great at doing models in the 7, 15, 70-billion-parameter range; and they’re going to be great models. Then, going to the level of tacit knowledge and the infrastructure that is running. "This exposure underscores the fact that the immediate security risks for AI applications stem from the infrastructure and tools supporting them," Wiz Research cloud security researcher Gal Nagli wrote in a blog post. The 67B Base model demonstrates a qualitative leap in the capabilities of DeepSeek LLMs, showing their proficiency across a wide range of applications. The model excels at delivering accurate and contextually relevant responses, making it well suited to a wide range of applications, including chatbots, language translation, content creation, and more. DeepSeek gathers this vast content from the farthest corners of the web and connects the dots to transform information into actionable recommendations.

The cache system uses 64 tokens as a storage unit; content shorter than 64 tokens will not be cached. Once the cache is no longer in use, it will be cleared automatically, usually within a few hours to a few days. The hard disk cache only matches the prefix part of the user's input.

AI Toolkit is part of your developer workflow as you experiment with models and get them ready for deployment. GPT-5 isn’t even ready yet, and here are updates about GPT-6’s setup. If the "core socialist values" defined by the Chinese Internet regulatory authorities are touched upon, or the political status of Taiwan is raised, discussions are terminated. PCs, starting with Qualcomm Snapdragon X first, followed by Intel Core Ultra 200V and others. The "expert models" were trained by starting with an unspecified base model, then SFT on both data, and synthetic data generated by an internal DeepSeek-R1 model.
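The caching rules stated above (a 64-token storage unit, nothing cached below one unit, and prefix-only matching) can be illustrated with a small sketch. This is not DeepSeek's implementation: the function names are hypothetical, tokens are modeled as plain integer IDs, and rounding the shared prefix down to whole 64-token units is an assumption drawn from the "storage unit" wording.

```python
from typing import Sequence

CACHE_UNIT = 64  # storage unit size in tokens, as stated in the text


def common_prefix_len(a: Sequence[int], b: Sequence[int]) -> int:
    """Count the leading tokens that both sequences share."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


def cacheable_prefix_tokens(cached: Sequence[int], request: Sequence[int],
                            unit: int = CACHE_UNIT) -> int:
    """Estimate how many tokens of `request` could be served from cache.

    Assumption: only whole `unit`-sized blocks of the shared prefix count,
    so a shared prefix shorter than one unit yields 0.
    """
    shared = common_prefix_len(cached, request)
    return (shared // unit) * unit


if __name__ == "__main__":
    earlier = list(range(200))             # token IDs of an earlier request
    later = list(range(150)) + [999] * 50  # same first 150 tokens, then diverges
    print(cacheable_prefix_tokens(earlier, later))       # 128 (two full units)
    print(cacheable_prefix_tokens(earlier[:40], later))  # 0 (below one unit)
```

Under these assumptions, a request that shares 150 leading tokens with an earlier one reuses 128 of them, and a request that differs in its first token reuses none, however much later content it has in common.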


By adding the directive, "You need first to write a step-by-step outline and then write the code." after the initial prompt, we have observed improvements in performance. The reproducible code for the following evaluation results can be found in the Evaluation directory. We used the accuracy on a selected subset of the MATH test set as the evaluation metric. This allows for more accuracy and recall in areas that require a longer context window, along with being an improved version of the previous Hermes and Llama line of models.

Staying in the US, versus taking a trip back to China and joining some startup that’s raised $500 million or whatever, ends up being another factor in where the top engineers actually want to spend their professional careers. So a lot of open-source work is things that you can get out quickly that get interest and get more people looped into contributing to them, whereas a lot of the labs do work that is maybe less relevant in the short term but hopefully turns into a breakthrough later on. China’s delight, however, spelled pain for several giant US technology companies as investors questioned whether DeepSeek’s breakthrough undermined the case for their colossal spending on AI infrastructure.
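The outline-first directive quoted above amounts to appending one fixed sentence to the task prompt. A minimal sketch of that step follows; the helper name is hypothetical, the directive wording is a cleaned-up reading of the quoted instruction, and placing it on a new line after the prompt is an assumption rather than a documented requirement.

```python
# Directive text as read from the article; treat the exact wording as an assumption.
COT_DIRECTIVE = (
    "You need first to write a step-by-step outline and then write the code."
)


def with_cot_directive(prompt: str, directive: str = COT_DIRECTIVE) -> str:
    """Return the prompt with the outline-first directive appended on a new line."""
    return f"{prompt.rstrip()}\n{directive}"


if __name__ == "__main__":
    task = "Write a function that checks whether a string is a palindrome."
    print(with_cot_directive(task))
```

The resulting string would then be sent to the model as the user message; how much this helps is something to measure on your own tasks rather than assume.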

