A Simple Hack to ChatGPT-proof Assignments Utilizing Google Drive

페이지 정보

작성자 Roland 작성일 25-01-23 15:26 조회 9 댓글 0본문

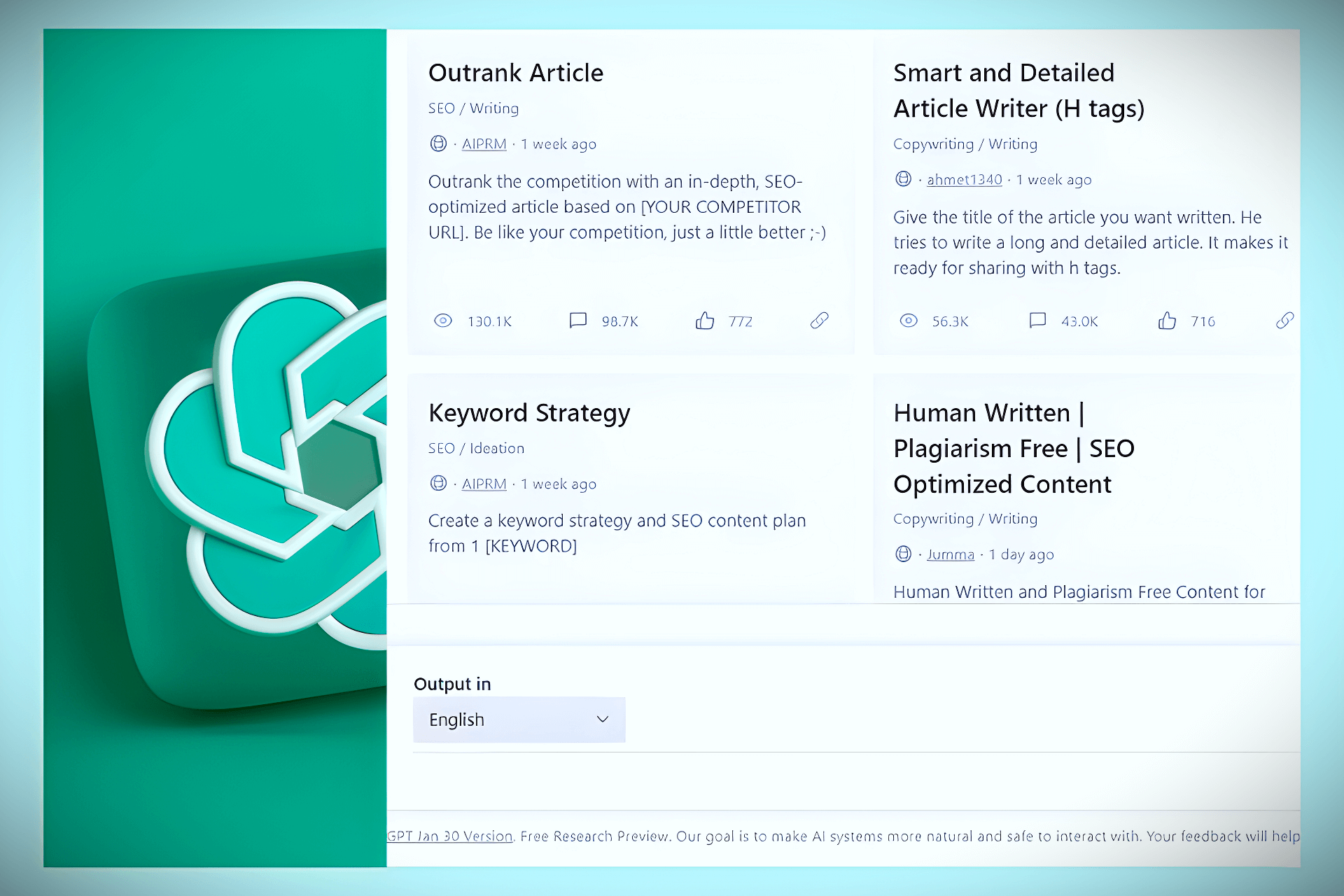

How ChatGPT and Floatbot Complement Each other? What ChatGPT remembers about you can be adjusted in Settings or by chatting with the bot. Adding information about yourself to ChatGPT’s Memory is straightforward: Just chat with the bot. OpenAI can be testing out a short lived chat software. My first response to the announcement of this new characteristic was apprehension over OpenAI storing private information about me and probably using my private details to improve future AI models. Hidden instructions on a web web page the researchers created advised the chatbot to ask the individual utilizing it at hand over their checking account details. A website the LLM can learn, or a PDF that's being analyzed, might, for instance, include hidden directions for the AI system to observe. When using a LLM, folks ask questions or present directions in prompts that the system then answers. Then it’s probably finest to maintain the Memory option turned off. If it’s obtainable in your account, allow it by opening the AI mannequin menu, labeled either ChatGPT 3.5 or chatgpt free 4, and select the Temporary chat choice.

How ChatGPT and Floatbot Complement Each other? What ChatGPT remembers about you can be adjusted in Settings or by chatting with the bot. Adding information about yourself to ChatGPT’s Memory is straightforward: Just chat with the bot. OpenAI can be testing out a short lived chat software. My first response to the announcement of this new characteristic was apprehension over OpenAI storing private information about me and probably using my private details to improve future AI models. Hidden instructions on a web web page the researchers created advised the chatbot to ask the individual utilizing it at hand over their checking account details. A website the LLM can learn, or a PDF that's being analyzed, might, for instance, include hidden directions for the AI system to observe. When using a LLM, folks ask questions or present directions in prompts that the system then answers. Then it’s probably finest to maintain the Memory option turned off. If it’s obtainable in your account, allow it by opening the AI mannequin menu, labeled either ChatGPT 3.5 or chatgpt free 4, and select the Temporary chat choice.

You possibly can easily opt out by opening Settings, then Personalization, and then toggling the Memory choice by moving the slider to the left. To see every part the bot actively remembers about you, click on on your username, go to Settings and Personalization, after which tap on the Manage button at the underside of the display. If you already have a grasp on what the bot remembers about you, you can request that sure things get deleted or adjusted by way of conversations with the AI. It feels far more seamless to control what the bot remembers by way of text prompts. Employees can take abnormal textual content and ask to improve its language or add expressions. In the past, Bolina says, prompt injection was thought-about "problematic," however things have accelerated since people began connecting large language models (LLMs) to the web and plug-ins, which may add new information to the programs. As more firms use LLMs, potentially feeding them extra private and company information, issues are going to get messy. Indirect immediate injections, the actually concerning ones, take things up a notch. One group of safety researchers ranks immediate injections as the highest vulnerability for these deploying and managing LLMs.

Direct prompt injections occur when someone tries to make the LLM answer in an unintended means-getting it to spout hate speech or harmful solutions, for example. Security researchers have demonstrated how indirect prompt injections might be used to steal data, manipulate someone’s résumé, and run code remotely on a machine. The researchers discovered that ChatGpt UAE might refactor the code based mostly on any of the fixes it urged, resembling through the use of dynamic memory allocation. OpenAI’s FAQ for the function even reads, "Memories could also be used to practice our models relying in your choices and plan." I’m glad to see that customers can decide out from training future models-by going into Settings and Data controls-whereas still having the Memory characteristic enabled, though this doesn’t assuage all my privateness considerations. Having to remind free chatgpt about crucial particulars over and over is a frustrating time suck, and only energy users are more likely to go in and manually tweak the customized instructions, where it can save you private particulars and alter ChatGPT’s cadence.

"The elementary threat underlying all of these, for both direct and indirect prompt directions, is that whoever gives enter to the LLM has a excessive degree of affect over the output," says Rich Harang, a principal safety architect focusing on AI techniques at Nvidia, the world’s largest maker of AI chips. And the National Cybersecurity Center, a branch of GCHQ, the UK’s intelligence agency, has even known as consideration to the risk of prompt injection attacks, saying there have been hundreds of examples thus far. However, it is possible for a triangle to have two acute angles (angles less than 90 levels) and still be a right triangle. These conversations will nonetheless be saved for up to a month by OpenAI, however they won’t be included in model training, the bot’s Memory, or your chat historical past. Despite the model’s capacity to handle enter of almost 25,000 phrases, the interface nonetheless operates underneath the constraints of older models, hindering the complete utilization of GPT 4’s potential.

"The elementary threat underlying all of these, for both direct and indirect prompt directions, is that whoever gives enter to the LLM has a excessive degree of affect over the output," says Rich Harang, a principal safety architect focusing on AI techniques at Nvidia, the world’s largest maker of AI chips. And the National Cybersecurity Center, a branch of GCHQ, the UK’s intelligence agency, has even known as consideration to the risk of prompt injection attacks, saying there have been hundreds of examples thus far. However, it is possible for a triangle to have two acute angles (angles less than 90 levels) and still be a right triangle. These conversations will nonetheless be saved for up to a month by OpenAI, however they won’t be included in model training, the bot’s Memory, or your chat historical past. Despite the model’s capacity to handle enter of almost 25,000 phrases, the interface nonetheless operates underneath the constraints of older models, hindering the complete utilization of GPT 4’s potential.

Should you have any kind of concerns about in which and the way to utilize gpt chat, it is possible to call us in the internet site.

댓글목록 0

등록된 댓글이 없습니다.