5 Key Ways the Pros Use DeepSeek

Posted by Cinda on 25-02-01 22:38

In some ways, DeepSeek was far less censored than most Chinese platforms, offering answers containing keywords that would normally be quickly scrubbed from domestic social media. Given that it is made by a Chinese company, how is it dealing with Chinese censorship? DeepSeek's developers appear to be racing to patch holes in the censorship. I'm based in China, and I registered for DeepSeek's A.I. As the world scrambles to understand DeepSeek: its sophistication, its implications for the global A.I. I suspect succeeding at NetHack is extremely hard and requires a very good long-horizon context system as well as an ability to infer quite complex relationships in an undocumented world. Why this is so impressive: the robots get a massively pixelated image of the world in front of them and, nonetheless, are able to automatically learn a bunch of sophisticated behaviors. Get back JSON in the format you want. But because of its "thinking" feature, in which the program reasons through its answer before giving it, you could still get effectively the same information you would get outside the Great Firewall, as long as you were paying attention before DeepSeek deleted its own answers.

Note that tokens outside the sliding window still affect next-word prediction. Advanced code completion capabilities: a window size of 16K and a fill-in-the-blank task support project-level code completion and infilling.

The code for the model was open-sourced under the MIT license, with an additional license agreement (the "DeepSeek license") covering "open and responsible downstream usage" of the model itself.

India is developing a generative AI model with 18,000 GPUs, aiming to rival OpenAI and DeepSeek. Each submitted solution was allocated either a P100 GPU or 2xT4 GPUs, with up to 9 hours to solve the 50 problems. They were trained on clusters of A100 and H800 Nvidia GPUs, connected by InfiniBand, NVLink, and NVSwitch.

Natural language excels at abstract reasoning but falls short in precise computation, symbolic manipulation, and algorithmic processing. To harness the strengths of both natural language and code, we implemented the Program-Aided Language Models (PAL) approach, or more precisely Tool-Augmented Reasoning (ToRA), originally proposed by CMU & Microsoft. This approach combines natural language reasoning with program-based problem-solving. To train the model, we needed a suitable problem set (the given "training set" of this competition is too small for fine-tuning) with "ground truth" solutions in ToRA format for supervised fine-tuning.
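The PAL/ToRA idea described above can be sketched as follows: the language model writes a short program, and an interpreter, not the model, produces the final number. This is a minimal sketch under assumptions: the model output format (a fenced Python block), the helper names `extract_program`, `run_program`, and `solve_with_program`, and the sample output are all hypothetical, and a plain subprocess with a timeout is not a real security sandbox.

```python
import re
import subprocess
import sys
from typing import Optional

FENCE = "`" * 3  # built at runtime so this example can sit inside a Markdown code block


def extract_program(model_output: str) -> Optional[str]:
    """Return the last fenced Python program in the model's output, if any."""
    pattern = re.escape(FENCE + "python") + r"\n(.*?)" + re.escape(FENCE)
    blocks = re.findall(pattern, model_output, flags=re.DOTALL)
    return blocks[-1] if blocks else None


def run_program(code: str, timeout_s: float = 10.0) -> str:
    """Execute the program in a separate Python process and return its stdout."""
    result = subprocess.run(
        [sys.executable, "-c", code],
        capture_output=True,
        text=True,
        timeout=timeout_s,
    )
    return result.stdout.strip()


def solve_with_program(model_output: str) -> Optional[int]:
    """PAL/ToRA-style step: let the interpreter, not the model, do the arithmetic."""
    code = extract_program(model_output)
    if code is None:
        return None
    try:
        out = run_program(code)
        return int(out.splitlines()[-1])  # last printed line is taken as the answer
    except (subprocess.TimeoutExpired, ValueError, IndexError):
        return None  # timeout, no output, or a non-integer result


# Hypothetical model output: a line of reasoning followed by a program.
sample = (
    "The sum of the first 100 positive integers can be computed directly.\n"
    + FENCE + "python\nprint(sum(range(1, 101)))\n" + FENCE
)
print(solve_with_program(sample))  # 5050
```

Returning `None` on timeouts or unparsable output lets a caller simply discard that sample, which fits naturally with sampling several candidate solutions per problem.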

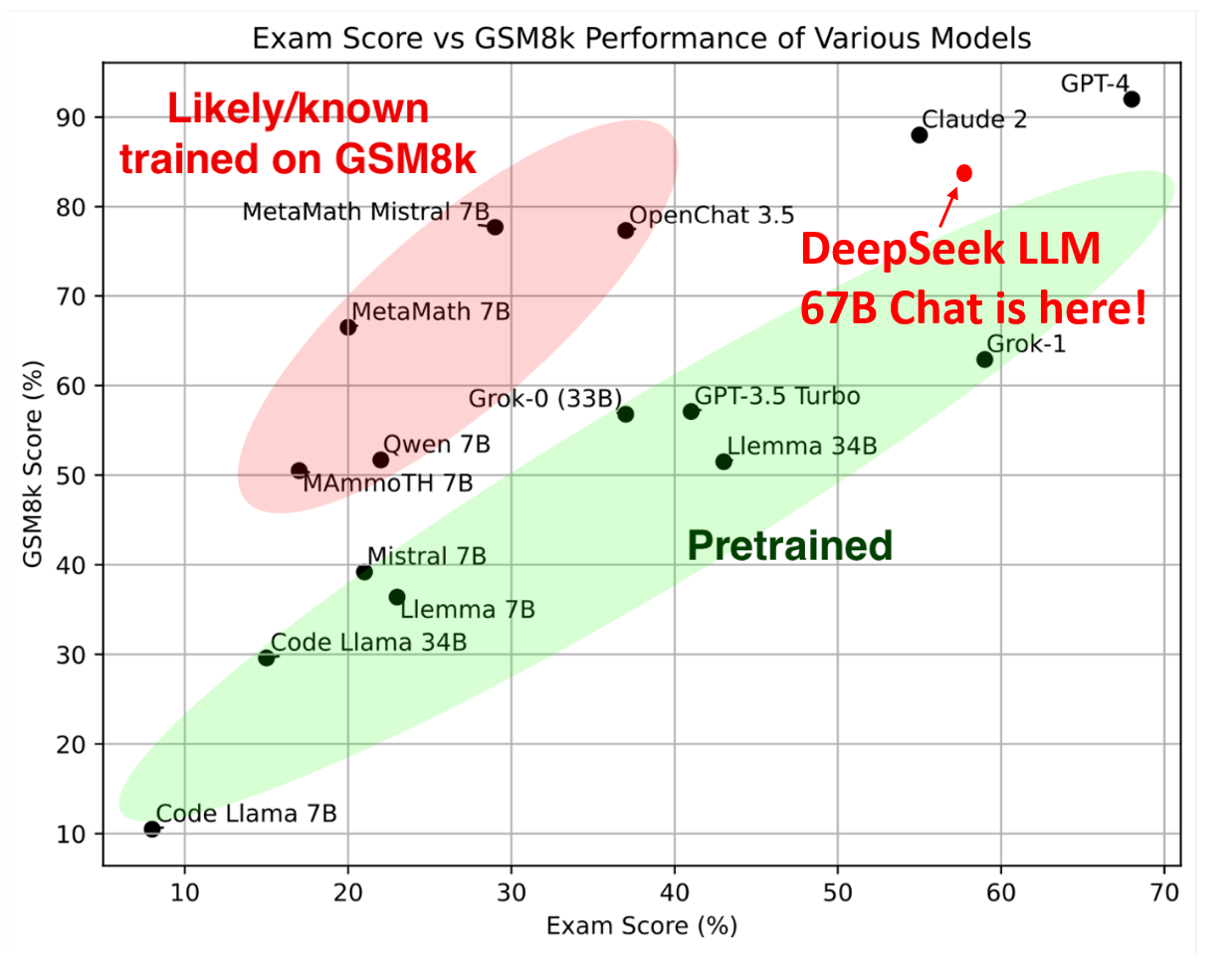
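The earlier note that tokens outside the sliding window still affect next-word prediction follows from stacking layers: each layer lets a position read from its own window, and the positions it reads have already summarized their own windows. The sketch below assumes plain causal sliding-window attention with a toy window of 4 tokens; it does not reflect DeepSeek's actual configuration, and `influencers` is a hypothetical helper.

```python
from typing import Set


def influencers(pos: int, window: int, layers: int) -> Set[int]:
    """Positions whose tokens can affect the hidden state at `pos` after `layers`
    stacked causal sliding-window attention layers (position i attends to j
    when i - window < j <= i)."""
    reach = {pos}
    for _ in range(layers):
        # Each layer pulls in everything inside the window of every position
        # already in the set, so the set grows by up to window - 1 per layer.
        reach = {j for i in reach for j in range(max(0, i - window + 1), i + 1)}
    return reach


# With a window of 4, one layer only lets position 15 see positions 12..15,
# but after three layers information from position 6 can reach it.
print(min(influencers(15, window=4, layers=1)))  # 12
print(min(influencers(15, window=4, layers=3)))  # 6
```

After `layers` layers the earliest influencing position is `pos - layers * (window - 1)`, so the effective reach grows linearly with depth even though each individual layer only looks back `window - 1` tokens.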

Unlike most teams, which relied on a single model for the competition, we used a dual-model approach, with the policy model serving as the primary problem solver. This method allows for more specialized, accurate, and context-aware responses to multi-faceted problems. In general, the problems in AIMO were significantly more challenging than those in GSM8K, a standard mathematical reasoning benchmark for LLMs, and about as difficult as the hardest problems in the challenging MATH dataset. Our final dataset contained 41,160 problem-answer pairs. Our final solutions were derived through a weighted majority voting system: we generated multiple solutions with the policy model, assigned a weight to each solution using the scores from a reward model, and then chose the answer with the highest total weight.
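The weighted majority voting step can be sketched as follows, with naive majority voting alongside for comparison. Each candidate is a pair of a final answer (from one sampled policy-model solution) and a reward-model score; the function names and the scores in the example are illustrative assumptions, not values from the competition.

```python
from collections import defaultdict
from typing import Dict, List, Optional, Tuple

Candidate = Tuple[int, float]  # (final answer, reward-model score)


def naive_majority_vote(candidates: List[Candidate]) -> Optional[int]:
    """Return the most frequent answer, ignoring reward scores."""
    counts: Dict[int, int] = defaultdict(int)
    for answer, _score in candidates:
        counts[answer] += 1
    return max(counts, key=counts.get) if counts else None


def weighted_majority_vote(candidates: List[Candidate]) -> Optional[int]:
    """Return the answer whose solutions have the highest total reward score."""
    totals: Dict[int, float] = defaultdict(float)
    for answer, score in candidates:
        totals[answer] += score
    return max(totals, key=totals.get) if totals else None


# Toy scores: three low-scoring samples agree on 42, two high-scoring ones on 17.
samples = [(42, 0.2), (42, 0.1), (42, 0.15), (17, 0.9), (17, 0.8)]
print(naive_majority_vote(samples))     # 42
print(weighted_majority_vote(samples))  # 17
```

The two rules diverge exactly when a frequent answer is backed only by low-confidence solutions, which is the situation the reward model is meant to catch. Ties go to whichever answer appeared first in the candidate list.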

This approach stemmed from our study on compute-optimal inference, which demonstrated that weighted majority voting with a reward model consistently outperforms naive majority voting given the same inference budget. We validated this approach on top of two baseline models of different scales. The private leaderboard determined the final rankings, which in turn determined how the one-million-dollar prize pool was distributed among the top five teams. Then they sat down to play the game. Asked about sensitive topics, the bot would begin to answer, then stop and delete its own work. Given the problem difficulty (comparable to AMC12 and AIME exams) and the special format (integer answers only), we used a combination of AMC, AIME, and Odyssey-Math as our problem set, removing multiple-choice options and filtering out problems with non-integer answers. Sometimes these stack traces can be very intimidating, and a great use case for code generation is to help explain the problem.
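The problem-set preparation described above (removing multiple-choice options and keeping only integer answers) might look like the following sketch. The record layout, with `problem`, `answer`, and an optional `choices` mapping from option letters to values, is a hypothetical format, not the actual schema of the AMC, AIME, or Odyssey-Math sources, and the helper names are illustrative.

```python
from typing import Dict, Iterable, List, Optional


def parse_integer_answer(answer: str) -> Optional[int]:
    """Return the answer as an int if it denotes an integer ("204", "-3", "7.0"), else None."""
    text = answer.strip()
    try:
        return int(text)
    except ValueError:
        pass
    try:
        value = float(text)
    except ValueError:
        return None  # fractions, radicals, expressions, etc.
    return int(value) if value.is_integer() else None


def prepare_problems(records: Iterable[Dict]) -> List[Dict]:
    """Strip multiple-choice options and keep only problems with integer answers."""
    kept = []
    for rec in records:
        answer = rec["answer"]
        choices = rec.get("choices")  # hypothetical: {"A": "10", "B": "12", ...}
        if choices and answer in choices:
            answer = choices[answer]  # replace the option letter with its value
        value = parse_integer_answer(answer)
        if value is None:
            continue  # filter out non-integer answers
        # The options are not copied over, so the model must produce the number itself.
        kept.append({"problem": rec["problem"], "answer": value})
    return kept


records = [
    {"problem": "AMC-style question", "choices": {"A": "10", "B": "12", "C": "3/2"}, "answer": "B"},
    {"problem": "AIME-style question", "answer": "204"},
    {"problem": "Question with a fractional answer", "answer": "3/4"},
]
print([r["answer"] for r in prepare_problems(records)])  # [12, 204]
```

If the options are embedded in the problem text itself rather than stored separately, they would also need to be removed from `problem`; this sketch does not handle that case.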
